Beta API for model pipelining with multiple Edge TPUs

Tested by the Coral Team

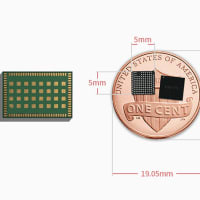

We've just released an updated Edge TPU Compiler and a new C++ API to enable pipelining a single model across multiple Edge TPUs. This can improve throughput for high-speed applications and can reduce total latency for large models that cannot fit into the cache of a single Edge TPU. To use this API, you need to recompile your model to create separate .tflite files for each segment that runs on a different Edge TPU.
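To illustrate the idea, here is a minimal sketch of pipelining using only the Python standard library. It is not the Coral C++ API and does not touch an Edge TPU: `run_pipeline` and the stand-in segment functions are hypothetical names chosen for this example. Each "segment" runs in its own worker thread, in the role that a separate Edge TPU running one compiled .tflite segment would play, and queues pass intermediate results from one stage to the next.

```python
import queue
import threading

_STOP = object()  # sentinel that tells each stage to shut down


def run_pipeline(segments, inputs):
    """Pass every input through all segments, one worker thread per segment.

    `segments` is a list of callables standing in for model segments
    (hypothetical; a real setup would run one compiled segment per device).
    Results come back in the same order as the inputs.
    """
    # One queue in front of each stage, plus one for the final outputs.
    queues = [queue.Queue() for _ in range(len(segments) + 1)]

    def worker(segment, q_in, q_out):
        while True:
            item = q_in.get()
            if item is _STOP:
                q_out.put(_STOP)  # propagate shutdown to the next stage
                return
            q_out.put(segment(item))

    threads = [
        threading.Thread(target=worker, args=(seg, queues[i], queues[i + 1]))
        for i, seg in enumerate(segments)
    ]
    for t in threads:
        t.start()

    # Feed all inputs; while stage 2 handles item 1, stage 1 can start item 2.
    for x in inputs:
        queues[0].put(x)
    queues[0].put(_STOP)

    results = []
    while True:
        out = queues[-1].get()
        if out is _STOP:
            break
        results.append(out)

    for t in threads:
        t.join()
    return results
```

Because the stages work concurrently, steady-state throughput in a design like this is bounded by the slowest stage rather than by the sum of all stages, which is the effect pipelining aims for.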

Here are all the changes included with this release:

The Edge TPU Compiler is now version 2.1.

You can update by running sudo apt-get update && sudo apt-get install edgetpu, or follow the instructions here.

The model pipelining API is available as source on GitHub. It is currently in beta and available in C++ only. For details, read our guide about how to pipeline a model with multiple Edge TPUs.

New embedding extractor models for EfficientNet, for use with on-device backpropagation.

Minor update to the Edge TPU Python library (now version 2.14) to add a new size parameter for run_inference().

New Colab notebooks to build C++ examples.

Send us any questions or feedback at cynthia@gravitylink.com
